Retrieval of Out-of-Distribution Objects

Retrieval System for Out-of-Distribution Objects



Method for Detecting Out-of-Distribution Objects Without Retraining

Published in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2024

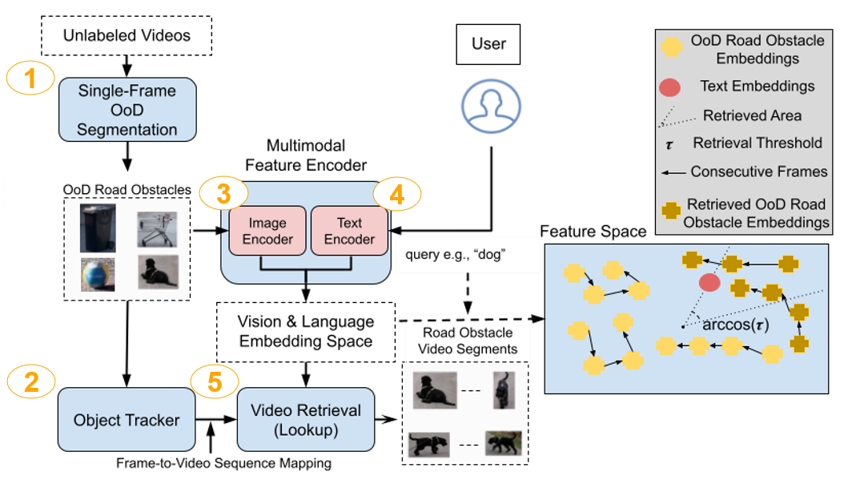

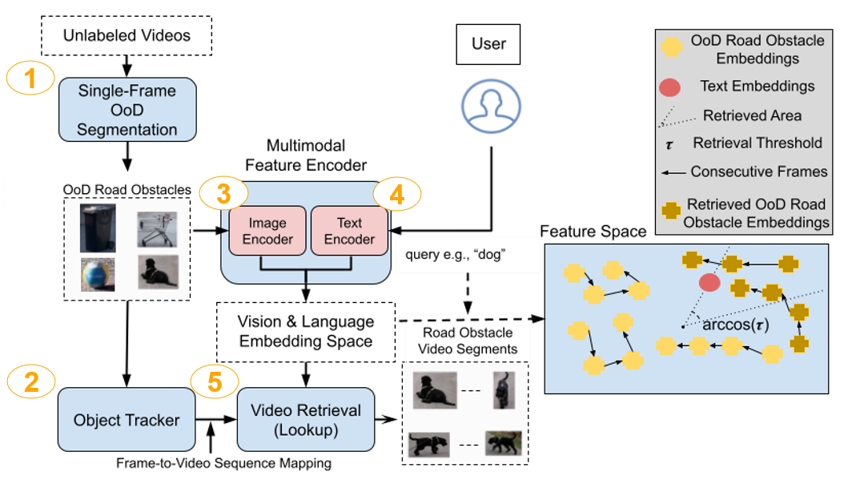

In the life cycle of highly automated systems operating in an open and dynamic environment, the ability to adjust to emerging challenges is crucial. For systems integrating data-driven AI-based components, rapid responses to deployment issues require fast access to related data for testing and reconfiguration. In the context of automated driving, this especially applies to road obstacles not included in the training data, commonly referred to as out-of-distribution (OoD) road obstacles. Given the availability of large uncurated driving scene recordings, a pragmatic approach is to query a database to retrieve similar scenarios featuring the same safety concerns due to OoD road obstacles. In this work, we extend beyond identifying OoD road obstacles in video streams and offer a comprehensive approach to extract sequences of OoD road obstacles using text queries, thereby proposing a way of curating a collection of OoD data for subsequent analysis. Our proposed method leverages recent advances in OoD segmentation and multi-modal foundation models to identify and efficiently extract safety-relevant scenes from unlabeled videos. We present a first approach for the novel task of text-based OoD object retrieval, which addresses the question “Have we ever encountered this before?”
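The retrieval step described above can be illustrated with a minimal sketch. It assumes that every detected OoD obstacle crop and the text query have already been encoded into a shared embedding space by a CLIP-style multi-modal model (the encoding itself is not shown). The functions `rank_by_text` and `group_into_sequences`, the `max_gap` rule for merging frames into sequences, and the toy embeddings are hypothetical illustrations, not the paper's actual pipeline.

```python
import numpy as np


def rank_by_text(text_emb: np.ndarray, crop_embs: np.ndarray, top_k: int = 5) -> np.ndarray:
    """Return indices of the top_k obstacle crops most similar to the text query."""
    # L2-normalise both sides so the dot product equals cosine similarity.
    t = text_emb / np.linalg.norm(text_emb)
    c = crop_embs / np.linalg.norm(crop_embs, axis=1, keepdims=True)
    sims = c @ t
    # Sort by descending similarity and keep the best top_k crops.
    return np.argsort(-sims)[:top_k]


def group_into_sequences(frame_ids, max_gap: int = 1):
    """Merge frame indices into (start, end) runs; frames at most max_gap apart share a run."""
    runs = []
    for f in sorted({int(x) for x in frame_ids}):
        if runs and f - runs[-1][1] <= max_gap:
            runs[-1][1] = f  # extend the current run
        else:
            runs.append([f, f])  # start a new run
    return [tuple(r) for r in runs]


# Toy data (hypothetical): six obstacle crops with 3-D embeddings and their source frames.
crop_embs = np.array([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.8, 0.2, 0.0],
                      [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [0.95, 0.05, 0.0]])
crop_frames = np.array([10, 11, 12, 40, 41, 90])
text_emb = np.array([1.0, 0.0, 0.0])  # stands in for an encoded text query

hits = rank_by_text(text_emb, crop_embs, top_k=4)
print(hits)                                     # [0 5 1 2]
print(group_into_sequences(crop_frames[hits]))  # [(10, 12), (90, 90)]
```

Grouping the ranked hits by frame index is one simple way to turn individual matches into scene sequences; a real system would likely also track obstacles across frames.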

Recommended citation: Shoeb, Y., Chan, R., Schwalbe, G., Nowzad, A., Güney, F. & Gottschalk, H. (2024). "Have We Ever Encountered This Before? Retrieving Out-of-Distribution Road Obstacles from Driving Scenes." Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV).

Download Paper | Download Slides

Published in International Journal of Computer Vision, 2024

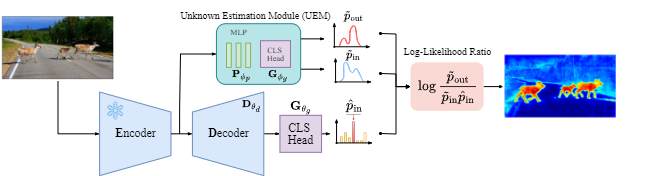

Addressing the Out-of-Distribution (OoD) segmentation task is a prerequisite for perception systems operating in an open-world environment. Large foundational models are frequently used in downstream tasks; however, their potential for OoD segmentation remains mostly unexplored. We seek to leverage a large foundational model to achieve robust representations. Outlier supervision is a widely used strategy for improving the OoD detection of existing segmentation networks. However, current approaches for outlier supervision involve retraining parts of the original network, which is typically disruptive to the model’s learned feature representation. Furthermore, retraining becomes infeasible in the case of large foundational models. Our goal is to add outlier supervision for segmentation without compromising the strong representation space of the foundational model. To this end, we propose an adaptive, lightweight unknown estimation module (UEM) for outlier supervision that significantly enhances the OoD segmentation performance without affecting the learned feature representation of the original network. UEM learns a distribution for outliers and a generic distribution for known classes. Using the learned distributions, we propose a likelihood-ratio-based outlier scoring function that fuses the confidence of UEM with that of the pixel-wise segmentation inlier network to detect unknown objects. We also propose an objective to optimize this score directly. Our approach achieves a new state of the art across multiple datasets, outperforming the previous best method by 5.74 average precision points while having a lower false-positive rate. Importantly, strong inlier performance remains unaffected.
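The core idea of a likelihood-ratio outlier score can be sketched as follows, assuming (for illustration only) that the learned outlier distribution and the generic known-class distribution are diagonal Gaussians over per-pixel features. The Gaussian form, the function names, and the toy parameters are hypothetical; the sketch omits the fusion with the inlier network's confidence and the training objective described in the abstract.

```python
import numpy as np


def diag_gaussian_logpdf(x, mean, var):
    """Log-density of a diagonal Gaussian, evaluated over the last axis of x."""
    return -0.5 * np.sum(np.log(2 * np.pi * var) + (x - mean) ** 2 / var, axis=-1)


def likelihood_ratio_score(feats, out_mean, out_var, in_mean, in_var):
    """Per-pixel score log p(f | outlier) - log p(f | known); larger means more likely unknown."""
    return (diag_gaussian_logpdf(feats, out_mean, out_var)
            - diag_gaussian_logpdf(feats, in_mean, in_var))


# Toy 1x2 "image" with 2-D pixel features (hypothetical values): the known-class
# density sits at the origin and the outlier density at (3, 3), both with unit variance.
feats = np.array([[[0.0, 0.0], [3.0, 3.0]]])  # shape (H=1, W=2, D=2)
ones = np.ones(2)
scores = likelihood_ratio_score(feats, 3 * ones, ones, np.zeros(2), ones)
print(scores.shape)  # (1, 2)
```

The first pixel, which matches the known-class density, receives a negative score and the second a positive one. Because the score is a log-ratio, a value of zero marks the point where both distributions explain the feature equally well.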

Recommended citation: Nayal, N.*, Shoeb, Y.*, & Güney, F. (2024). "A Likelihood Ratio-Based Approach to Segmenting Unknown Objects." International Journal of Computer Vision (IJCV).

Download Paper | Download Slides

Published in The 35th British Machine Vision Conference (BMVC), 2024

For real-world applications, deep neural networks (DNNs) must recognize and adapt to previously unseen inputs and changing environments. To achieve this, we propose a novel method to augment DNNs with the capability to identify and incrementally learn novel classes that were not present in their initial training set. Our approach uses anomaly detection to retrieve out-of-distribution (OoD) samples as potential candidates for new classes and uses k empty classes to learn these novel classes incrementally in an unsupervised fashion. We introduce two loss functions, which 1) encourage the DNN to allocate OoD samples to the new empty classes and 2) minimize the intra-class feature distance within the newly formed classes. Unlike previous approaches that rely on labeled data for each class, our model uses a single label for all OoD data and a precomputed distance matrix to differentiate between them. Our experiments across image classification and semantic segmentation tasks show our method’s ability to expand a DNN’s understanding by several classes without requiring explicit ground truth labels.
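The two loss ideas above can be approximated in a minimal sketch. It assumes that the last k columns of the classifier output are the empty classes and that new-class assignments are already available; `empty_class_loss` and `intra_class_distance` are hypothetical stand-ins, and the sketch does not use the precomputed distance matrix that the paper relies on to separate OoD samples.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def empty_class_loss(logits_ood, n_known):
    """Mean of -log(probability mass on the empty classes) over OoD samples.

    Columns [n_known:] of logits_ood are treated as the k empty classes."""
    p = softmax(logits_ood)
    return float(np.mean(-np.log(p[:, n_known:].sum(axis=1))))


def intra_class_distance(feats, assignments):
    """Mean squared distance of each feature to the centroid of its assigned new class."""
    total = 0.0
    for c in np.unique(assignments):
        members = feats[assignments == c]
        total += float(np.sum((members - members.mean(axis=0)) ** 2))
    return total / len(feats)


# Two known classes followed by k = 2 empty classes (hypothetical logits).
uniform = np.zeros((1, 4))
confident = np.array([[0.0, 0.0, 10.0, 10.0]])
print(empty_class_loss(uniform, n_known=2))    # log(2), about 0.693
print(empty_class_loss(confident, n_known=2))  # close to 0

feats = np.array([[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]])
assignments = np.array([0, 0, 1])              # two samples in new class 0, one in class 1
print(intra_class_distance(feats, assignments))  # about 0.667
```

The first loss only asks that OoD samples land somewhere among the empty classes, leaving the split between them to the second, clustering-style term.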

Recommended citation: Uhlemeyer, S., Lienen, J., Shoeb, Y., Hüllermeier, E. & Gottschalk, H. (2024). "Unsupervised Class Incremental Learning using Empty Classes." Proceedings of the British Machine Vision Conference Workshops.

Download Paper | Download Slides

Published in 36th IEEE Intelligent Vehicles Symposium (IV), 2025

Advances in machine learning methods for computer vision tasks have led to their consideration for safety-critical applications like autonomous driving. However, effectively integrating these methods into the automotive development lifecycle remains challenging. Since the performance of machine learning algorithms relies heavily on the training data provided, the data and model development lifecycles play a key role in successfully integrating these components into the product development lifecycle. Existing models frequently encounter difficulties recognizing or adapting to novel instances not present in the original training dataset. This poses a significant risk for reliable deployment in dynamic environments. To address this challenge, we propose an adaptive neural network architecture and an iterative development framework that enables users to efficiently incorporate previously unknown objects into the current perception system. Our approach builds on continuous learning, emphasizing the necessity of dynamic updates to reflect real-world deployment conditions. Specifically, we introduce a pipeline with three key components: (1) a scalable network extension strategy to integrate new classes while preserving existing performance, (2) a dynamic OoD detection component that requires no additional retraining for newly added classes, and (3) a retrieval-based data augmentation process tailored for safety-critical deployments. The integration of these components establishes a pragmatic and adaptive pipeline for the continuous evolution of perception systems in the context of autonomous driving.
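One generic way to integrate new classes while preserving existing behaviour is to append output rows to a linear classifier head and leave the existing rows untouched. The sketch below assumes a plain linear head; `extend_head`, its initialisation scale, and the toy shapes are hypothetical and are not the paper's network extension strategy.

```python
import numpy as np


def extend_head(W, b, n_new, scale=0.01, seed=0):
    """Return a linear classifier head with n_new extra output rows.

    Rows for existing classes are copied unchanged; new rows get small random
    weights and zero bias."""
    rng = np.random.default_rng(seed)
    W_ext = np.vstack([W, scale * rng.standard_normal((n_new, W.shape[1]))])
    b_ext = np.concatenate([b, np.zeros(n_new)])
    return W_ext, b_ext


rng = np.random.default_rng(1)
W, b = rng.standard_normal((3, 8)), rng.standard_normal(3)  # 3 known classes, 8-D features
x = rng.standard_normal((5, 8))                            # 5 feature vectors

W2, b2 = extend_head(W, b, n_new=2)
old_logits = x @ W.T + b
new_logits = x @ W2.T + b2
print(new_logits.shape)                            # (5, 5)
print(np.allclose(new_logits[:, :3], old_logits))  # True
```

Copying the old rows keeps the known-class logits identical, but the softmax renormalises over all classes, so known-class probabilities can still shift once the new rows are trained. Freezing the copied rows and updating only the new ones is one common way to limit that drift.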

Recommended citation: Shoeb, Y., Nowzad, A. & Gottschalk, H. (2025). "Adaptive Neural Networks for Intelligent Data-Driven Development." IEEE Intelligent Vehicles Symposium (IV).

Download Paper | Download Slides

Published in 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, 2025

Detecting road obstacles is essential for autonomous vehicles to navigate dynamic and complex traffic environments safely. Current road obstacle detection methods typically assign a score to each pixel and apply a threshold to generate final predictions. However, selecting an appropriate threshold is challenging, and the per-pixel classification approach often leads to fragmented predictions with numerous false positives. In this work, we propose a novel method that leverages segment-level features from visual foundation models and likelihood ratios to predict road obstacles directly. By focusing on segments rather than individual pixels, our approach enhances detection accuracy, reduces false positives, and offers increased robustness to scene variability. We benchmark our approach against existing methods on the RoadObstacle and LostAndFound datasets, achieving state-of-the-art performance without needing a predefined threshold.
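The shift from pixels to segments can be sketched as mask-averaged pooling of per-pixel features followed by a likelihood-ratio decision. This assumes, for illustration only, that segment masks come from a foundation model such as a promptable segmenter and that obstacle and road features follow isotropic Gaussians; the function names and parameters are hypothetical. Deciding at a log-ratio of zero is the standard equal-prior Bayes rule, used here as a stand-in for the paper's threshold-free prediction.

```python
import numpy as np


def pool_segments(feats, masks):
    """Average per-pixel features (H, W, D) inside each boolean mask (N, H, W) -> (N, D)."""
    m = masks.astype(feats.dtype)
    counts = m.sum(axis=(1, 2))[:, None]
    summed = np.einsum("nhw,hwd->nd", m, feats)
    return summed / np.maximum(counts, 1.0)  # guard against empty masks


def iso_gaussian_logpdf(x, mean, var):
    """Log-density of an isotropic Gaussian over the last axis of x."""
    d = x.shape[-1]
    return -0.5 * (d * np.log(2 * np.pi * var) + np.sum((x - mean) ** 2, axis=-1) / var)


def obstacle_segments(feats, masks, obs_mean, road_mean, var=1.0):
    """Flag a segment as an obstacle when its pooled feature is more likely under the obstacle density."""
    seg = pool_segments(feats, masks)
    log_ratio = iso_gaussian_logpdf(seg, obs_mean, var) - iso_gaussian_logpdf(seg, road_mean, var)
    return log_ratio > 0, log_ratio


# Toy 2x2 image with 1-D features and two segments (top row, bottom row).
feats = np.array([[[0.0], [0.0]],
                  [[5.0], [5.0]]])
masks = np.array([[[True, True], [False, False]],
                  [[False, False], [True, True]]])
is_obstacle, log_ratio = obstacle_segments(
    feats, masks, obs_mean=np.array([5.0]), road_mean=np.array([0.0]))
print(is_obstacle)  # [False  True]
```

Because every pixel in a segment shares one decision, the output cannot fragment into isolated false-positive pixels the way a thresholded per-pixel score map can.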

Recommended citation: Shoeb, Y., Nayal, N., Nowzad, A., Güney, F. & Gottschalk, H. (2025). "Segment-Level Road Obstacle Detection Using Visual Foundation Model Priors and Likelihood Ratios." Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications - Volume 2: VISAPP.

Download Paper | Download Slides

Published in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2025

In this paper, we review the state of the art in Out-of-Distribution (OoD) segmentation, with a focus on road obstacle detection in automated driving as a real-world application. We analyse the performance of existing methods on two widely used benchmarks, SegmentMeIfYouCan Obstacle Track and LostAndFound-NoKnown, highlighting their strengths, limitations, and real-world applicability. Additionally, we discuss key challenges and outline potential research directions to advance the field. Our goal is to provide researchers and practitioners with a comprehensive perspective on the current landscape of OoD segmentation and to foster further advancements toward safer and more reliable autonomous driving systems.

Recommended citation: Shoeb, Y., Nowzad, A. & Gottschalk, H. (2025). "Out-of-Distribution Segmentation in Autonomous Driving: Problems and State of the Art." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops.

Download Paper | Download Slides
